2023 CIS Juniper Benchmark Updates

2023 is upon us and the New Years “fogginess” is finally clearing a bit….

…. so time for an update on the Center for Internet Security Juniper OS Benchmark and what the Juniper Community has planned for this year.

Summary

Talking of New Years Resolutions…. if I am guilty of any indulgence, it is verbosity (in fact, I boot every day with -vvvv mode) - so I will start off with a Summary, and you can choose how much else you want to read ;)

Version 2.2.0

Minor update to current version, which was published back in 2020

Add new features/options

Review current recommendations

Target Feb 2023 for Consensus start

Target March 2023 for Publication

Version 3.0.0

Major update

Will add new Level 1 and Level 2 Profiles for different Device Families (EX, SRX, MX, etc)

Better reflect differences between device security for a Switch, Router or Firewall (for example)

Account for platform differences in a clearer way

Target Q3/4 2023 for Consensus start

Target Q4 for Publication

Hang on, CIS BenchWhatNow?

If this is the first time you are hearing of CIS Benchmarks, don’t panic. If you know all about it, feel free to skip this bit.

I will write a more detailed blog soon on CIS and the various programmes they run like MS-ISAC/EI-ISAC or CIS Critical Security Controls. But as I am terrible at remembering to come back and do follow up blogs - let’s do a bit of background here.

You could also check out the talk that I gave at the Bristech Conference in 2018 (which is now a bit out of date, particularly around the Controls). YouTube link in the sidebar of this post.

Who are Center for Internet Security (CIS)?

The Center for Internet Security (CIS) is an independent, community driven, non-profit organization which aims to make the connected world safer.

CIS was set up in 2000 with co-operation from ISACA, the SANS Institute, (ISC)², the American Institute of Certified Public Accountants (AICPA) and The Institute of Internal Auditors (IIA) - and you know it’s serious when the Bean Counters are involved - to help provide the Internet Community with robust Security Configuration Hardening best practice.

This best practice is provided, for free, through the CIS Benchmarks, which now cover over 100 different guides including for Linux (in various distros), Windows, Apple iOS, Android, Docker, Exchange Server and network devices from Cisco, Juniper, Fortinet, Checkpoint and others.

CIS SecureSuite Members contribute to the running of CIS and also benefit from a number of additional tools, like the CIS Configuration Analysis Tool, to help audit and evaluate their current configurations against the Benchmark standards.

Since 2000, the membership has grown significantly, as has the number of resources that CIS provides. These include the CIS Critical Security Controls, which were formerly managed by SANS as the SANS Top 20 Controls, and CIS Hardened Images - Virtual Machines pre-hardened using the appropriate CIS Benchmark and available directly through the AWS, Azure, GCP or Oracle Cloud Marketplaces.

How Benchmarks are Developed (get involved!)

CIS Benchmarks, and all CIS projects, are community driven. This really is the core of the whole organization.

So rather than just one or two authors being employed to come up with secure configuration guidance for a given platform, CIS hosts a Benchmark Community for each Benchmark that is going to be produced. No Benchmark for a system you are an expert on yet? You can suggest one and form a community to produce it.

Each Benchmark forms a set of detailed, technical steps (Recommendations) to harden and audit the target platform, along with background information on why this is recommended.

Recommendations are split across two Levels:

Level 1 - Basic steps which should generally be applied in any deployment and should not have undesirable side effects. For example, using SSH rather than Telnet for CLI access to a given device.

Level 2 - More complex, demanding or intrusive steps that should be considered for high security environments.

Level 2 Recommendations may break functionality and require extensive testing.

For example, requiring that only strong cryptographic algorithms be allowed for SSH access is done at Level 2. This raises security significantly, but may impact compatibility with SSH Clients or Management/Automation Services, so requires interop testing, particularly if automation like NETCONF or Ansible is in use.
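To make the Level 1 example concrete, here is a minimal sketch of what an automated check for "SSH rather than Telnet" might look like. It assumes the configuration has been exported in `set` format (for example with `show configuration | display set`) and only looks at the `set system services telnet` and `set system services ssh` statements. The function name, the sample hostname and the finding text are made up for illustration, and this is not the audit procedure from the Benchmark itself.

```python
def audit_cli_access(set_lines):
    """Return findings for a simple 'SSH, not Telnet' style check.

    set_lines: iterable of configuration statements in 'set' format.
    Deactivated statements and inherited groups are not handled here.
    """
    statements = [line.strip() for line in set_lines if line.strip()]
    telnet_on = any(s.startswith("set system services telnet") for s in statements)
    ssh_on = any(s.startswith("set system services ssh") for s in statements)

    findings = []
    if telnet_on:
        findings.append("Telnet is enabled for CLI access")
    if not ssh_on:
        findings.append("SSH is not enabled for CLI access")
    return findings


# Hypothetical sample configuration, for illustration only.
sample_config = """
set system host-name lab-mx1
set system services ssh
set system services telnet
""".splitlines()

for finding in audit_cli_access(sample_config):
    print("FAIL:", finding)
```

A Level 2 check for SSH algorithms could follow the same pattern, but it would need the interop testing described above before being enforced.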

Benchmarks are published for free by CIS, who also make free audit tools, with some additional tools available to paid SecureSuite members. In addition, many 3rd Party Audit/Compliance systems (such as Tripwire or ManageEngine) can assess and monitor compliance with CIS Benchmark Recommendations.

Benchmark Communities are made of Subject Matter Experts (SMEs) and real world users of the platform. Often experts from the vendor of the system and CIS Staff will also be involved, but they do not have any greater control or input than any other member.

Community Members may come from organizations who are CIS SecureSuite Members, or from the wider Internet Community by signing up as an Individual (as I did originally) by going to https://workbench.cisecurity.org/ and registering.

As a Community Member, you are able to directly contribute to the Benchmarks that interest you by raising tickets for any errors you spot or things you think are missing.

Community Members who contribute to Benchmarks are credited when it is published and can earn Continuing Education Credits (CPE) for Security Certifications such as CISSP, C|EH/C|ND or SANS GIAC.

Editors, CIS Leaders and Consensus

Tickets raised by Community Members are open to comment by the whole Benchmark Community, so everyone is able to discuss the merits of any proposed change or addition.

Once an agreement has been reached, or if there is no real discussion because the ticket is fixing something that is obviously an error, then one of the Editors/Authors for the Community will update/add the relevant Recommendation ready to be included in the next release.

Editors/Authors are volunteer members of the community, who have undertaken a bigger commitment to the project. They will work through all of the tickets as they come in and write any content that is needed. Generally they will also write new Benchmarks, taking input from the community, but also creating the initial text ready for comment.

I have been the Editor/Lead Author for the Juniper Benchmark Community since ~2008/9, having been involved with other CIS Benchmarks and Beta testing of CIS-CAT for some years prior. At the time the only CIS Benchmark for network systems was for Cisco IOS and, as I kept raising that we should have one for Juniper, I was asked if I would like to write one as a starting point.

That first Juniper Benchmark was published in July 2010. The most recent version, v2.1.0, was released in October 2020.

New major revisions tend to be quite extensive projects (moving from v1.x to v2.x took many months of work, and involved a systematic review of every single recommendation). Minor releases are a bit less involved. They tend to be triggered when either a serious error, omission or change of guidance is required or when a number of more minor issues have been raised as tickets.

Either way, before a Benchmark can be Published a formal Consensus Process needs to take place. This is a call to action managed by a member of CIS Staff who acts as the Benchmark Leader, in conjunction with the Benchmark Editors. The aim is to inform less active members of the Benchmark Community and, as much as possible, the wider user base for the platform that:

a) a new version is ready to be published

b) that it is now open for review

c) that there is a fixed time frame

Normally consensus will last for 2-4 weeks.

If no significant issues are identified, the new Benchmark release will then be published. This will potentially trigger updates to other standards, such as NIST STIGs/Checklists or CIS-CAT, which are based on CIS Benchmark Recommendations.

Updates to Current Version

The current version of the Juniper Benchmark (ver 2.1.0) was published in November 2020 after an extensive review, with a lot more specific guidance given and a number of gaps filled.

However, with Covid and chaos, time has moved on and it is time for another minor update before we get on with the more significant changes as we move to Version 3.0.0 later in the year (see later in this post).

There are a few reasons for doing a minor update/refresh before we start on Version 3:

New Features:

Since 2020 Junos has gone through multiple major releases, introducing new features and options which need to be accounted for.

For example, Recommendation 6.3.1 Ensure external AAA is used and Recommendation 6.3.2 Ensure Local Accounts can ONLY be used during loss of external AAA deal with how Login Authentication is performed, requiring that users are authenticated against a Central AAA source rather than as Local users.

Since this recommendation was written, an additional option has been made available for some platforms - using LDAP over TLS for User Authentication (for example against Active Directory) so the recommendations need to be updated to reflect this change.

At the time of writing this blog - Feature Explorer only shows support for ACX710, but the Junos documentation on the feature suggests wider support.

I have never had cause to use LDAPS for Login Authentication in production, so I will need to do some testing (probably in VLabs - have I mentioned VLabs? it’s great, it’s free - I will do a separate blog about it soon!) to confirm before adding the changes to the recommendation.
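As an illustration of how the intent of Recommendations 6.3.1 and 6.3.2 could be checked automatically, here is a minimal sketch. It assumes the configuration is available in `set` format and that external AAA is expressed through `set system authentication-order` using the `radius` or `tacplus` methods. It also relies on the Junos behaviour that, when `password` is left out of the authentication order, local accounts are only tried if the external servers cannot be reached. The keyword for the newer LDAP over TLS option is deliberately not included because it still needs testing, and the function names and finding text are hypothetical rather than the Benchmark's own audit procedure.

```python
# Methods treated as "external AAA" in this sketch (an assumption).
EXTERNAL_METHODS = {"radius", "tacplus"}
PREFIX = "set system authentication-order"


def authentication_order(set_lines):
    """Collect the configured authentication methods in order of appearance."""
    order = []
    for line in set_lines:
        line = line.strip()
        if line.startswith(PREFIX + " "):
            # Accept both one-method-per-line and bracketed list forms.
            for token in line[len(PREFIX):].split():
                if token not in ("[", "]"):
                    order.append(token)
    return order


def audit_aaa(set_lines):
    """Return findings for the external AAA / local fallback checks."""
    order = authentication_order(set_lines)
    findings = []
    if not any(method in EXTERNAL_METHODS for method in order):
        findings.append("no external AAA method in authentication-order")
    if "password" in order:
        findings.append("local password authentication is listed in authentication-order")
    return findings


print(audit_aaa(["set system authentication-order [ radius password ]"]))
```

Once the LDAP over TLS option has been confirmed in testing, supporting it in a check like this should only mean adding its keyword to `EXTERNAL_METHODS`.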

Things Change in Security:

The next reason for doing a minor update is for the Juniper Community to review the existing recommendations and make sure that they still stand.

Security is a Journey not a Destination…. and other trite truisms aside, the goal posts do move.

It wasn’t that long ago (certainly during my time looking after the Junos Benchmark, which goes back to 2008/2009) when MD5 or SHA1 were considered perfectly acceptable, or even highly recommended, Hashing Algorithms for things like HMACs in IPsec VPNs or Signing X.509 “SSL” Certificates.

But they are definitely not recommended algorithms anymore. For example, Mozilla began the long process of phasing out support for SHA1 for Certificate Signing back in 2014 (see this excellent Mozilla Security Blog Post for more).

So, it is essential with any Benchmark to review recommendations periodically, especially when it comes to crypto standards, key lengths, etc.
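A periodic review like this can be partly automated. The sketch below scans `set` format configuration for any statement whose keyword ends in `algorithm` and whose value mentions MD5 or SHA1. The proposal names are hypothetical, the sample statements assume SRX style IKE/IPsec proposal syntax, and the list of "weak" patterns is an assumption for illustration rather than the Benchmark's own guidance.

```python
import re

# Matches "md5", "sha1" or "sha-1", but not "sha-256" and similar.
WEAK = re.compile(r"md5|sha-?1(?![0-9])", re.IGNORECASE)


def weak_algorithm_lines(set_lines):
    """Return the statements that configure an algorithm matching WEAK."""
    hits = []
    for line in set_lines:
        tokens = line.split()
        # Walk over (keyword, value) pairs of adjacent tokens.
        for keyword, value in zip(tokens, tokens[1:]):
            if keyword.endswith("algorithm") and WEAK.search(value):
                hits.append(line.strip())
                break
    return hits


# Hypothetical proposals, for illustration only.
sample_config = [
    "set security ike proposal IKE-OLD authentication-algorithm md5",
    "set security ipsec proposal ESP-OLD authentication-algorithm hmac-sha1-96",
    "set security ipsec proposal ESP-NEW authentication-algorithm hmac-sha-256-128",
]

for hit in weak_algorithm_lines(sample_config):
    print("REVIEW:", hit)
```

Keeping the pattern in one place means that when the goal posts move again, only `WEAK` needs to change.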

Something Missing and a New Consensus:

The final reason for doing a minor update before we start on the more in-depth process for Ver3, is that we are bound to have missed something….. I miss things, and we as a community probably miss things too.

This is why we have the Consensus Process and why all CIS Benchmarks are community driven. We want to get as many eyes on the revisions as possible both now and during the formal consensus process so we can:

Add coverage for new features

Review current guidance for changes

Be alerted to anything that we have missed in the current release

We are targeting early Feb 2023 to start the Consensus Process for the CIS Juniper OS Benchmark Ver2.2.0.

Assuming Consensus is reached in a typical time, that means the new version should be released in late February or early March 2023.

By including these in a minor release, we can then get on with a more major overhaul in version 3….

Major Refresh - Ver 3.0.0

One of the things I have always loved about Juniper systems is the concept of “One Junos”…. it sounds a bit Lord of the Rings now I write it down, but the idea was that the software for all Junos platforms is compiled from the same Code Base and follows the same release train.

In practical terms, this means that if you know how to configure a feature on one platform, say OSPF on an MX Router - then you also know how to configure the same feature on any other Junos platform that supports it. And for the most part this is still the case.

When we released Version 1 of the Juniper Junos Benchmark in 2010, we were focused on the Routing platforms (M, MX and T Series at the time).

The SRX was still very new, and not very widely adopted, and EX was likewise quite new (and differed quite significantly from the Routers). But as we continued to develop, thanks to the “One Junos” philosophy from Juniper (which with the massive effort in disaggregating Junos from the hardware has only got better over time), most of the recommendations continued to apply equally across the various different platforms so these were included, without being branched out into separate, product specific Benchmarks.

Platform Specific Mess

This works fine for core/common features, like Routing Protocols and management services (SSH, NETCONF, JWeb, etc) with differences being handled with notes within the Recommendation.

For example applying a “ProtectRE” Firewall Filter to the Loopback interface to screen Exception Traffic (traffic going to the Routing Engine, like SSH session or BGP Updates) is equally applicable across the current routing (MX, PTX and ACX), switching (EX, QFX) and (to a degree) firewall platforms (SRX).

However, there are some important caveats as to how it impacts traffic or what choices exist between the different device types.

For the routing platforms, this filter will apply to In Band Management traffic (traffic received on a Revenue Port in the Data/Forwarding Plane, and forwarded internally to the RE) and to Out of Band Management traffic (traffic sent directly to the RE on the Fxp0 OOB Management Port).

On the switching platforms however (QFX/EX), this is not the case: the filter on Lo0 does not cover OOB management traffic. If you want to screen OOB management traffic you also need to apply a Firewall Filter to Me0 (the switch version of the RE OOB Management Port). I have no idea why this difference exists (if you know the reason, please comment!), but it does.

For the SRX Firewalls, In-Band Management traffic may (for the most part) be better managed by using Security Policy to the junos-host Security Zone rather than a stateless Firewall Filter. OOB traffic would still use the Firewall Filter though.
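The router and switch cases above can be sketched as a simple audit check. This example assumes `set` format configuration, treats an input filter applied under `family inet` or `family inet6` on an interface as the "ProtectRE" filter, and uses `me0` as the switch OOB port as described above (some switch models may name this port differently). The filter name, function names and the `REQUIRED` table are hypothetical. The SRX case is left out because it depends on Security Policy as well as Firewall Filters.

```python
import re

# e.g. "set interfaces lo0 unit 0 family inet filter input PROTECT-RE"
FILTER_RE = re.compile(
    r"^set interfaces (\S+) unit \d+ family inet6? filter input(?:-list)? (\S+)"
)

# Interfaces that need an input filter per device type (assumption for this sketch).
REQUIRED = {
    "router": ["lo0"],          # Lo0 filter also covers Fxp0 on routing platforms
    "switch": ["lo0", "me0"],   # Lo0 filter does not cover Me0 on switches
}


def filtered_interfaces(set_lines):
    """Map interface name -> set of input filter names applied to it."""
    found = {}
    for line in set_lines:
        match = FILTER_RE.match(line.strip())
        if match:
            found.setdefault(match.group(1), set()).add(match.group(2))
    return found


def missing_protect_re(set_lines, device_type):
    """Return the required interfaces that have no input filter applied."""
    have = filtered_interfaces(set_lines)
    return [ifname for ifname in REQUIRED[device_type] if ifname not in have]


sample_config = [
    "set interfaces lo0 unit 0 family inet filter input PROTECT-RE",
    "set interfaces me0 unit 0 family inet address 192.0.2.10/24",
]

print("router:", missing_protect_re(sample_config, "router"))
print("switch:", missing_protect_re(sample_config, "switch"))
```

The same configuration passes as a router but fails as a switch, which is exactly the kind of platform difference that is awkward to express in a single Recommendation.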

Historically, these kinds of platform discrepancies have been handled using notes on the relevant Benchmark Recommendations. One of the things I am keen to do in V2.2.0 (probably the first ticket I am going to raise) is to update the text to make this a lot clearer.

But, this is becoming increasingly messy. And it makes creating simple Audit Conditions much more difficult - particularly relevant as we move towards making Automated Auditing easier.

Adding Structure - Reducing Mess

Because of this increasing complexity in the Benchmark text and Auditing procedure due to handling these differences within a single Benchmark, we have been looking for over a year at how we can do it better in the future. And this is what Version 3.0.0 will be for.

We have previously considered splitting the Juniper Benchmark out into a series of separate Benchmarks - perhaps one for Routers, one for Switches, one for Firewalls, or perhaps one per platform. This has been done for some other Benchmark Communities, notably the Cisco Benchmarks within the networking space.

But with the “One Junos” approach by Juniper, a vast majority of recommendations would be the same across all of the Juniper Benchmarks - if you change recommendations for SSH version on an MX Router, you are going to also want to apply that to an EX Switch or an SRX Firewall.

So any update to one of these Common Recommendations raised in one Benchmark, would have to be synchronized across all of the others - with a consensus and release process needed for all of them.

From an Editors/Community point of view, that is adding a lot of maintenance headache and lots of possibilities to make new and exciting mistakes in the future.

We did consider creating a “Junos Common” Benchmark, and then specific “Annex” Benchmarks for each platform which would have the platform specific settings - but even this would create a lot of complexity where a Recommendation applies to most, but not all, platforms or applies differently to different platforms (like the ProtectRE example given earlier).

For End Users, that is going to get really confusing as well.

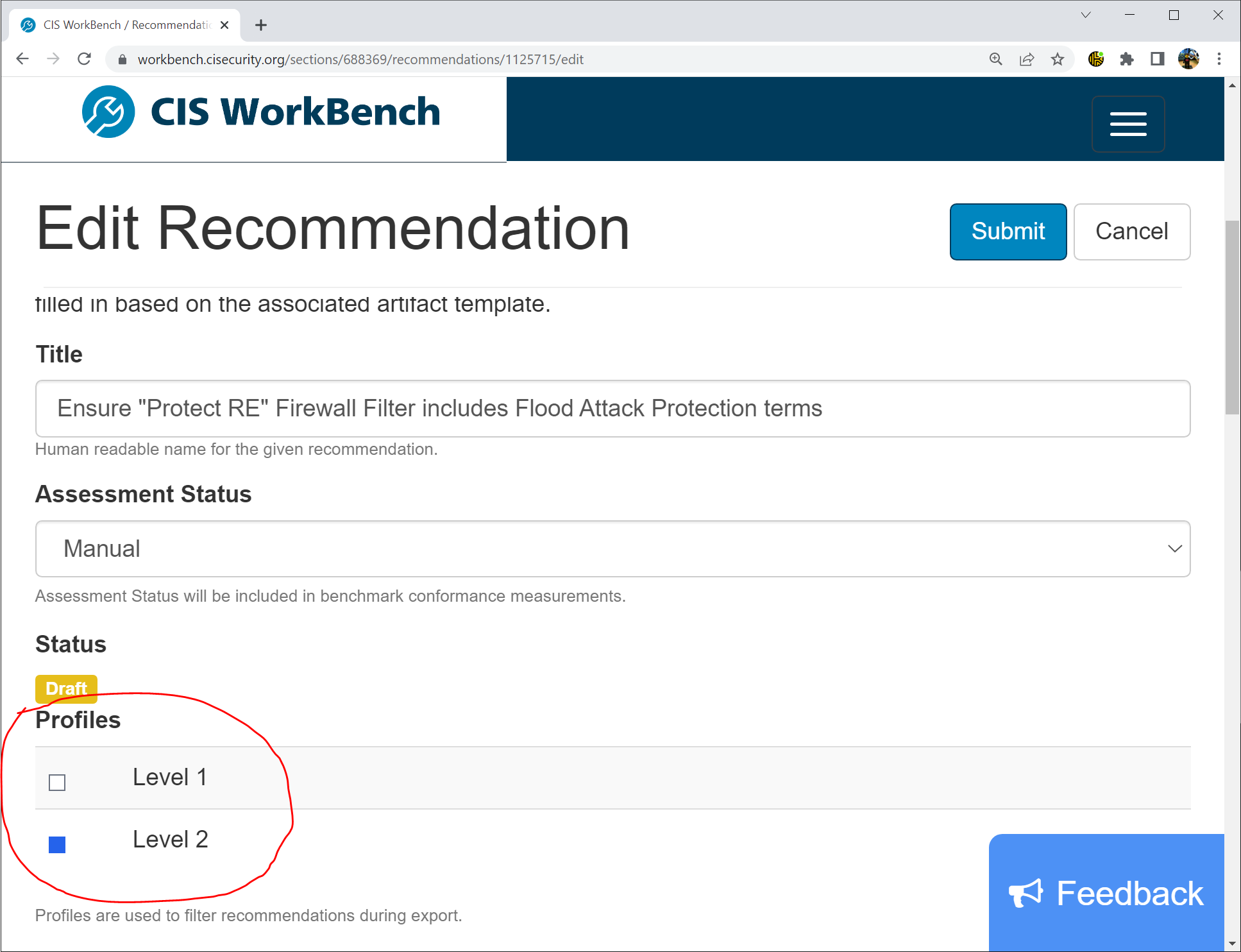

Instead we are going to use an existing feature of Workbench and Benchmarks - Profiles.

Profiles, Profiles, Profiles…

Profiles are collections of Recommendations. We already use two Profiles in the Juniper Benchmark:

Level 1 Profile

Level 2 Profile

If you are looking to secure a Junos Device with basic hardening standards you can audit or apply just the Level 1 Profile. If you are looking to secure a Junos Device for a High Security environment, you should review the Level 1 and the Level 2 Profiles together.

A Recommendation is tagged by the Editor when it is created as being either Level 1 or Level 2. It is effectively just a bit of metadata on the Recommendation which End Users can use to filter their view of the Benchmark.

Editing one of the existing Level 2 Recommendations in Workbench

At the moment, only one Profile is tagged per Recommendation. But we can support multiple Profiles for each.

A Recommendation will still only be Level 1 or Level 2 to allow filtering between the two, but will also now be tagged with one or more of the following in a hierarchical fashion:

Junos Common - Recommendations that apply to all Junos Devices

Router - Adds generic Router specific Recommendations (eg. the ProtectRE Filter on Lo0 and how it applies to Fxp0)

MX - Adds any MX Specific Recommendations that would not apply to other routers

PTX - Adds any PTX Specific Recommendations

ACX - Adds any ACX Specific Recommendations

Switch - Adds generic Switch specific Recommendations (eg. how the ProtectRE filter on Lo0 does not apply to Me0)

EX - Adds any EX Specific Recommendations that would not apply to other switches

QFX - Adds any QFX Specific Recommendations that would not apply to other switches

Firewall - Adds generic Firewall specific Recommendations (eg. restrictions on Host-Inbound-Traffic in Security Zones)

SRX - the only supported Firewall type at present, so effectively the same as the generic Firewall type. The hierarchy is created to allow any future platforms to be supported.

Unlike the Level 1/Level 2 (which remain either/or), these new Profiles will be additive.

So a Recommendation like 6.10.6 Ensure Telnet is Not Set which would apply at Level 1 to all types of Junos device would be tagged with the Level 1 Profile and with all of the above Profiles.

While a new, Firewall specific, Recommendation such as Ensure that Default Security Policy is Not Set to Permit (which seems an eminently sensible candidate now we’re adding SRX specific configuration) would be tagged with Level 1, Firewall and SRX only (the duplication of Firewall and SRX gives some level of support to other Juniper Firewalls, such as the now EoL LN Series, or to some future firewall, without needing another major overhaul and restructuring).
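The tagging described above can be modelled as a small tree of Profiles, where tagging a Recommendation at one point in the hierarchy also tags everything beneath it. This is only a sketch of the idea: the `PARENT` mapping is read off the proposed hierarchy, the function names are hypothetical, and none of this reflects how Workbench actually stores Profiles.

```python
# Child profile -> parent profile, following the proposed hierarchy.
PARENT = {
    "Router": "Junos Common",
    "Switch": "Junos Common",
    "Firewall": "Junos Common",
    "MX": "Router",
    "PTX": "Router",
    "ACX": "Router",
    "EX": "Switch",
    "QFX": "Switch",
    "SRX": "Firewall",
}


def with_descendants(profile):
    """Return the profile plus every profile beneath it in the hierarchy."""
    result = {profile}
    for child, parent in PARENT.items():
        if parent == profile:
            result |= with_descendants(child)
    return result


def tags(level, profile):
    """Tags for a Recommendation: one Level plus all applicable device Profiles."""
    return {f"Level {level}"} | with_descendants(profile)


# Applies everywhere, so it is tagged from the top of the tree down.
telnet_tags = tags(1, "Junos Common")
# Firewall specific, so only Firewall and SRX are added.
default_policy_tags = tags(1, "Firewall")

print(sorted(telnet_tags))
print(sorted(default_policy_tags))
```

Tagging from a point in the tree, rather than listing every platform by hand, is one way an Editor could avoid forgetting a Profile when a new platform is added later.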

From the Editor/Community's perspective - this allows us to account for platform specific differences, and support for new/unlisted platforms, while handling the commonality in a manageable way.

Taking the example of the ProtectRE Firewall Filter again. This would not go in the Junos Common profile, even though some kind of filter is needed for all platforms.

Instead of one, very complicated, Recommendation which explains all the caveats and platform specific choices - we would have three separate versions of this Recommendation:

Ensure a “Protect RE” Firewall Filter is applied to Lo0 - based on the current Recommendation would be in the Level 1, Router, MX, PTX and ACX Profiles, but not in Junos Common or any others. This would apply to In-Band and OOB Management (via Fxp0).

Ensure “Protect RE” Firewall Filters are applied to Lo0 and Me0 - with specific information about the normal filter on Lo0 not applying to Me0 would be in the Level 1, Switch, EX and QFX Profiles - but not the others.

Ensure “Protect RE” Firewall Filter is set if Fxp0 is used - would include information about protecting SRX Firewalls for OOB Management via Fxp0 (with separate Recommendations covering Host-Inbound-Traffic and junos-host Security Policy for In Band). This would be in the Level 1, Firewall and SRX Profiles only.

With this structure in place, the End User only needs to select two Profiles:

The Level they want to Secure to, just as at present.

The type of device they want to secure.

For the type of device, they might select the generic “type” (such as Router) or they can choose the specific series (such as MX) - for most Recommendations the results would be identical, but this allows us to add series specific recommendations where appropriate (for example around securing hardware or media types that one platform supports but the others do not).
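From the End User's side, the selection could be sketched as a simple filter over tagged Recommendations. The three "Protect RE" titles and the Telnet title are taken from the text above. The Level 2 SSH title is hypothetical, as are the class and function names, and the sketch assumes that choosing Level 2 also includes the Level 1 Recommendations (reviewing both together, as described earlier).

```python
from dataclasses import dataclass

ALL_DEVICES = frozenset({
    "Junos Common", "Router", "MX", "PTX", "ACX",
    "Switch", "EX", "QFX", "Firewall", "SRX",
})


@dataclass(frozen=True)
class Recommendation:
    title: str
    level: int            # 1 or 2
    profiles: frozenset   # device Profiles this Recommendation is tagged with


RECOMMENDATIONS = [
    Recommendation("Ensure Telnet is Not Set", 1, ALL_DEVICES),
    Recommendation('Ensure a "Protect RE" Firewall Filter is applied to Lo0', 1,
                   frozenset({"Router", "MX", "PTX", "ACX"})),
    Recommendation('Ensure "Protect RE" Firewall Filters are applied to Lo0 and Me0', 1,
                   frozenset({"Switch", "EX", "QFX"})),
    Recommendation('Ensure "Protect RE" Firewall Filter is set if Fxp0 is used', 1,
                   frozenset({"Firewall", "SRX"})),
    # Hypothetical title for the Level 2 SSH example.
    Recommendation("Ensure only strong SSH algorithms are allowed", 2, ALL_DEVICES),
]


def select(recommendations, level, device):
    """Titles that apply for the chosen Level and device Profile."""
    return [
        rec.title
        for rec in recommendations
        if rec.level <= level and device in rec.profiles
    ]


for title in select(RECOMMENDATIONS, 1, "MX"):
    print("MX / Level 1:", title)
for title in select(RECOMMENDATIONS, 2, "EX"):
    print("EX / Level 2:", title)
```

With the sample data here, selecting "Router" or "MX" returns the same list, which matches the expectation that the generic and series specific Profiles only diverge where series specific Recommendations exist.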

Any Other Profiles?

For Version 3.0.0, I don’t think there will be a need for any more. The aim at this stage is to account for Platform divergence, not for different deployment types. However, the final decision on how the Profiles are structured and what is or is not included will, as always, be down to the Juniper Benchmark Community.

In future updates (perhaps for 3.1 or similar) we might look to add support for different deployment types or roles by extending the existing hierarchy a bit further.

For example, how you may typically configure and secure Edge Ports on an Enterprise Access Switch vs a Data Centre Access Switch/Leaf Node is going to differ quite significantly - but you might use an EX Series (or a QFX) in either of these Roles.

So potentially there could be scope to extend the device hierarchies to support this in the future, something like the following might be added for the Switches (for example) :

Junos Common - Recommendations that apply to all Junos Devices

Switch - Adds generic Switch specific Recommendations (eg. how the ProtectRE filter on Lo0 does not apply to Me0)

EX - Adds any EX Specific Recommendations that would not apply to other switches

EX Access Switch - Adds Port Security, Dot1x, etc appropriate to the Access Switch Role

EX Core/Distribution Switch - Adds things like STP Root Protection, etc

EX Data Center Edge Switch - Adds different Port Security and access requirements.

QFX - Adds any QFX Specific Recommendations that would not apply to other switches

QFX Fabric Switch - Adds EVPN, VCF or other Fabric related options

QFX Data Center Edge Switch - Adds appropriate Port Security requirements.

Within Routers you could see a similar type of Role dependent addition for roles like CPE, PE, Metro and so on.

However, whether this additional set of profiles proves necessary or not remains to be seen. We handle a lot of this role diversity by virtue of having recommendations that apply if certain features are configured.

So if EVPN is configured apply the EVPN related security options, if VCF is configured, apply any VCF related security options…. so some of these Role Profiles could appear in the future, but perhaps not many. Or perhaps not at all.
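The "applies if the feature is configured" approach can be sketched as follows. The example assumes `set` format configuration and treats any `protocols <name>` statement as evidence that the protocol is configured. The recommendation titles in `CONDITIONAL` are placeholders, not real Benchmark Recommendations, and VCF is left out because how to detect it reliably is not covered here.

```python
# Feature -> Recommendations that only apply when it is configured.
# Titles are placeholders for illustration.
CONDITIONAL = {
    "evpn": ["(placeholder) EVPN related security options"],
    "ospf": ["(placeholder) OSPF related security options"],
}


def configured_features(set_lines):
    """Return the protocol names that appear after a 'protocols' keyword."""
    features = set()
    for line in set_lines:
        tokens = line.split()
        for index, token in enumerate(tokens[:-1]):
            if token == "protocols":
                features.add(tokens[index + 1])
    return features


def applicable(set_lines):
    """Conditional Recommendations that apply to this configuration."""
    features = configured_features(set_lines)
    return [
        title
        for feature in sorted(features)
        for title in CONDITIONAL.get(feature, [])
    ]


# Hypothetical sample configuration.
sample_config = [
    "set protocols bgp group OVERLAY type internal",
    "set protocols evpn encapsulation vxlan",
]

print(applicable(sample_config))
```

Because the check keys off what is actually configured, a QFX acting as a Fabric Switch and one acting as an Edge Switch would pick up different Recommendations without needing separate Role Profiles.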

Thanks

CIS Juniper OS Benchmark

Version 2.1.0

New to CIS Benchmarks?

Check out this talk that I gave at Bristech in 2018.

(note - CIS Controls have been updated to Ver 8 since this video was recorded)

Help write the CIS Benchmarks - Gain experience and earn CPEs - Learn More
